Cracking Image Classification with CNNs: Building an Intelligent Classifier for CIFAR-10

- Julia Johnson

- Sep 19, 2024

- 4 min read

Updated: Jun 15, 2025

Project Overview

This project focuses on building an image classification system using machine learning techniques. The goal was to develop a model that can accurately identify and categorize images into predefined classes. Using popular Python libraries such as TensorFlow and Keras, I trained and evaluated a convolutional neural network (CNN) on a dataset of labeled images. This hands-on project deepened my understanding of deep learning concepts and of the practical steps required to build an effective image recognition solution.

Tools & Technologies

For this project, I used Python as the main programming language, along with powerful libraries such as TensorFlow and Keras for building and training the convolutional neural network. To preprocess and manage the image data, I utilized libraries like NumPy and OpenCV. Additionally, Jupyter Notebook served as my development environment, allowing me to experiment with code interactively and visualize results in real time. These tools combined enabled me to create an efficient and effective image classification model.

Approach & Methodology

I began by selecting a labeled dataset suitable for classification, such as CIFAR-10 or a custom dataset of common objects. The images were preprocessed through resizing, normalization, and data augmentation to improve model performance and generalization.

Next, I designed a convolutional neural network (CNN) architecture with multiple convolutional and pooling layers followed by dense layers. The model was compiled with the Adam optimizer and sparse categorical cross-entropy as the loss function. I trained the network over multiple epochs, fine-tuned hyperparameters, and monitored the model’s accuracy and loss on both the training and validation sets.
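Adam keeps a running average of each parameter's gradient and of its squared gradient, and uses both to scale every update. The sketch below is a minimal NumPy version of that update rule applied to a toy quadratic. It illustrates the algorithm and is not Keras' implementation. The defaults are the usual Adam values, with `eps=1e-7` matching Keras' default.

```python
import numpy as np

class Adam:
    """Minimal Adam optimizer for a NumPy parameter array (illustration only)."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # running mean of gradients (first moment)
        self.v = None  # running mean of squared gradients (second moment)
        self.t = 0     # step counter used for bias correction

    def step(self, params, grads):
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grads
        self.v = self.beta2 * self.v + (1 - self.beta2) * grads ** 2
        # Both averages start at zero, so correct their bias toward zero
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Minimize f(w) = sum((w - 3)^2), whose gradient is 2 * (w - 3)
opt = Adam(lr=0.1)
w = np.array([0.0, 10.0])
for _ in range(1000):
    w = opt.step(w, 2 * (w - 3))
```

In Keras the same optimizer is `tf.keras.optimizers.Adam(learning_rate=0.001)`, or simply `optimizer='adam'` in `model.compile`.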

To evaluate the model, I tested it on unseen images and analyzed the classification report and confusion matrix. I also visualized predictions to better understand where the model succeeded and where improvements were needed.
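The post doesn't say which tool produced the classification report. scikit-learn's `classification_report` and `confusion_matrix` (in `sklearn.metrics`) are a common choice. The sketch below is a dependency-light alternative that computes the same per-class quantities with NumPy. It assumes integer true labels and integer predicted class indices, and the toy labels are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes=10):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when (true, pred) pairs repeat
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def per_class_report(cm):
    """Precision, recall, and F1 for each class, computed from a confusion matrix."""
    tp = np.diag(cm).astype(np.float64)
    predicted = cm.sum(axis=0)  # how often each class was predicted
    actual = cm.sum(axis=1)     # how often each class truly occurs
    # Guard against division by zero for classes never predicted or never present
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1

# Toy labels for three classes (hypothetical, not the project's data)
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm = confusion_matrix(y_true, y_pred, num_classes=3)
precision, recall, f1 = per_class_report(cm)
```

In a Keras workflow, `y_pred` would typically be `model.predict(x_test).argmax(axis=1)` and `y_true` would be the flattened test labels, `y_test.ravel()`.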

Challenges & Lessons Learned

One of the biggest challenges I faced was getting the model to generalize well without overfitting. In the beginning, my model performed well on the training data but struggled with validation accuracy. I tackled this by implementing data augmentation, dropout layers, and experimenting with different model architectures.

Another challenge was understanding the intricate behavior of CNN layers and how hyperparameters like kernel size, number of filters, and learning rate impacted performance. Through lots of trial and error, debugging, and reading documentation and forums, I gained a stronger grasp of how these components work together to affect model accuracy.

This project taught me the value of patience, experimentation, and consistent learning. It also boosted my confidence in working with machine learning tools and deep learning techniques, skills that are highly valuable in the tech industry.

Dataset Overview

The CIFAR-10 dataset consists of 60,000 images in 10 categories:

Airplane

Automobile

Bird

Cat

Deer

Dog

Frog

Horse

Ship

Truck

The images are small, low-resolution (32x32 pixel) color images, which makes the dataset well suited to experimenting with CNNs. The dataset is divided into 50,000 training images and 10,000 test images.
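CIFAR-10 stores each label as an integer that follows the class order listed above, from airplane = 0 to truck = 9. The sketch below turns those integers back into readable names. It assumes labels in the `(N, 1)` integer array shape that `tf.keras.datasets.cifar10.load_data()` returns, and uses a tiny stand-in array for the real labels.

```python
import numpy as np

# CIFAR-10 label indices 0-9, in the dataset's standard class order
CLASS_NAMES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]

# 60,000 images split evenly across 10 classes
IMAGES_PER_CLASS = (50_000 + 10_000) // len(CLASS_NAMES)

def decode_labels(labels):
    """Map integer labels of shape (N,) or (N, 1) to class names."""
    flat = np.asarray(labels).reshape(-1)  # (N, 1) -> (N,)
    return [CLASS_NAMES[i] for i in flat]

# Stand-in labels with the same (N, 1) shape as Keras' y_train / y_test
example = np.array([[3], [8], [0]])
names = decode_labels(example)
```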

Step-by-Step Process

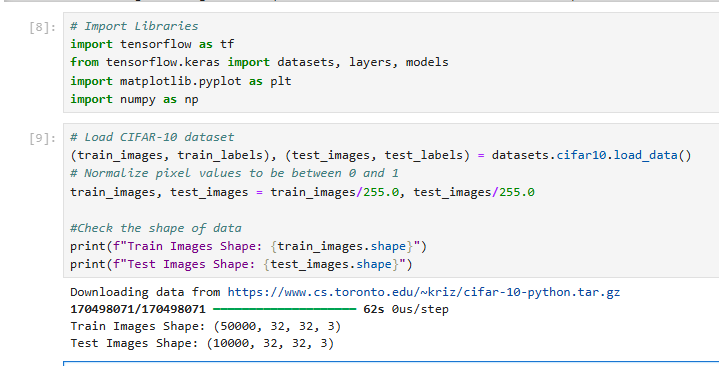

Step 1: Data Preprocessing

I started by loading the CIFAR-10 dataset with TensorFlow's Keras library. The images were normalized by dividing pixel values by 255, which scales them into the [0, 1] range and helps training converge faster.
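Here is a minimal NumPy sketch of this scaling step. In the project the arrays would come from `tf.keras.datasets.cifar10.load_data()`. Here a random `uint8` batch with the same `(N, 32, 32, 3)` shape stands in, so the example runs without downloading the data.

```python
import numpy as np

def normalize_images(images):
    """Scale uint8 pixel values in [0, 255] to float32 values in [0, 1]."""
    images = np.asarray(images)
    if images.dtype != np.uint8:
        raise ValueError("expected uint8 pixel data")
    # 255 is the largest value a uint8 pixel can take
    return images.astype(np.float32) / 255.0

# Stand-in batch shaped like CIFAR-10 data: (N, 32, 32, 3), uint8
rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
scaled = normalize_images(batch)
```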

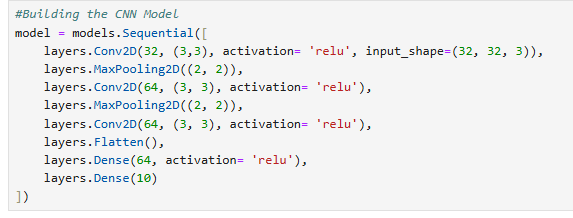

Step 2: Building the CNN Model

To classify the images, I built a Convolutional Neural Network (CNN). The model consisted of several layers:

Convolutional layers to capture features from the images.

Max pooling layers to down-sample the data and reduce computational complexity.

Fully connected layers for classification into one of the 10 categories.
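The sketch below makes the first two layer types concrete in NumPy. It is not the author's code, which uses Keras layers. A hand-picked 3x3 vertical-edge kernel stands in for a learned filter, and 2x2 max pooling follows the convolution. Like Keras' `Conv2D`, the convolution step computes a cross-correlation, so the kernel is not flipped.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation with no padding ('valid')."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the window under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/cols that don't fill a window are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# A 6x6 image: dark left half, bright right half (a vertical edge)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-made vertical-edge kernel; a trained CNN learns kernels like this from data
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

features = conv2d_valid(image, kernel)  # shape (4, 4), strong response at the edge
pooled = max_pool2d(features)           # shape (2, 2), keeps the strongest responses
```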

The architecture included three convolutional layers, each followed by a pooling layer, and finally two fully connected (dense) layers.
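The text doesn't list kernel sizes or filter counts, so the following sketch rests on stated assumptions. It assumes 3x3 kernels with no padding (Keras' default `padding='valid'`), 2x2 max pooling, and hypothetical filter counts of 32, 64, and 64. It traces how each conv/pool block shrinks a 32x32 input before the dense layers.

```python
def conv_out(size, kernel=3, stride=1, padding=0):
    """Output height/width of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, pool=2):
    """Output height/width of non-overlapping pooling (stride equal to pool size)."""
    return size // pool

def trace_shapes(input_size=32, filters=(32, 64, 64)):
    """Spatial size after each conv -> pool block (hypothetical filter counts)."""
    size = input_size
    shapes = []
    for f in filters:
        size = conv_out(size)  # 3x3 conv, no padding
        shapes.append(("conv", size, size, f))
        size = pool_out(size)  # 2x2 max pooling
        shapes.append(("pool", size, size, f))
    flattened = size * size * filters[-1]  # values fed to the first dense layer
    return shapes, flattened

shapes, flattened = trace_shapes()
```

Under these assumptions the final feature map is 2x2x64, so the first dense layer receives 256 values. With `padding='same'`, the convolutions would keep the spatial size and only pooling would shrink it (32 -> 16 -> 8 -> 4).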

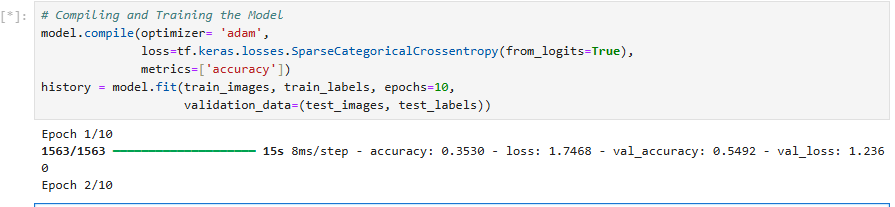

Step 3: Compiling and Training the Model

I compiled the model using the Adam optimizer and the sparse categorical cross-entropy loss function. The network was trained for 10 epochs, with validation data used to monitor performance after each epoch.
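The "sparse" variant of the loss expects integer class labels, like CIFAR-10's 0-9, rather than one-hot vectors, so no label conversion is needed. The NumPy sketch below shows the math rather than Keras' implementation. Keras also clips probabilities by a small epsilon, but its internals may differ. The sketch checks that the sparse and one-hot forms give the same loss.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean cross-entropy for integer labels and per-class probabilities.

    labels: shape (N,) or (N, 1), integers in [0, num_classes)
    probs:  shape (N, num_classes), rows summing to 1 (e.g. softmax output)
    """
    labels = np.asarray(labels).reshape(-1)
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0)
    # Pick out the probability assigned to each sample's true class
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(true_class_probs)))

def categorical_crossentropy(one_hot_labels, probs, eps=1e-7):
    """Same loss, but with one-hot encoded labels."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0)
    return float(-np.mean(np.sum(one_hot_labels * np.log(probs), axis=1)))

labels = np.array([0, 2])
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
sparse_loss = sparse_categorical_crossentropy(labels, probs)
dense_loss = categorical_crossentropy(np.eye(3)[labels], probs)
```

In Keras this corresponds to `model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])`. If the model's last layer outputs raw logits rather than softmax probabilities, the loss should instead be `tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)`.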

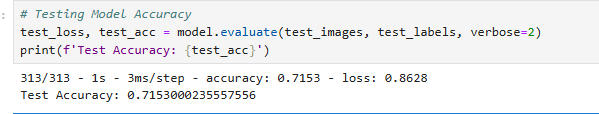

Step 4: Testing the Model for Accuracy

After training, I evaluated the model on the 10,000 test images to see how well it generalizes to new data. The test accuracy was calculated with a single line of code, shown in the screenshot below.

Results

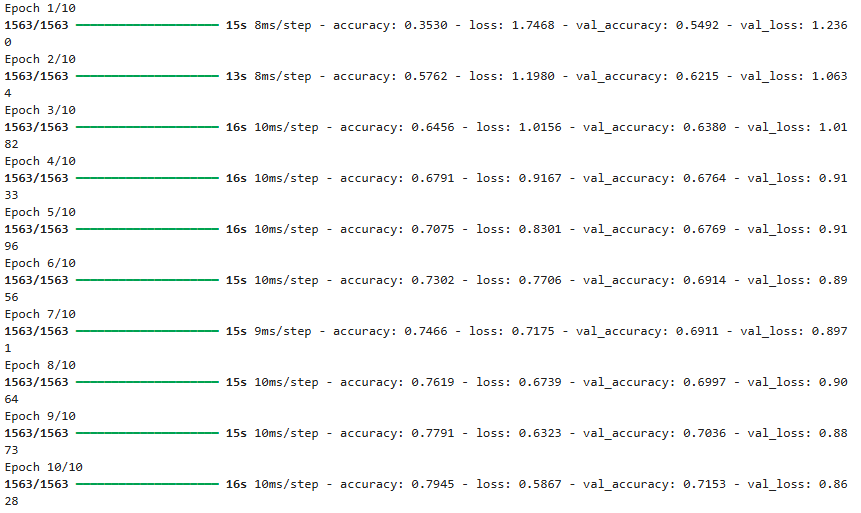

Training Accuracy: The model's training accuracy improved steadily as the number of epochs increased.

Test Accuracy: In the final evaluation, the model achieved a test accuracy of approximately 71%, meaning it correctly classified about 71% of the test images.
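Test accuracy is the fraction of test images whose predicted class matches the true label, where the predicted class is the argmax of the model's 10 output scores. In Keras, `model.evaluate(x_test, y_test)` reports this metric when the model was compiled with `metrics=['accuracy']`. A minimal NumPy sketch with hypothetical scores:

```python
import numpy as np

def accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the true label."""
    predicted = np.argmax(scores, axis=1)
    labels = np.asarray(labels).reshape(-1)  # accept (N,) or (N, 1) labels
    return float(np.mean(predicted == labels))

# Hypothetical scores for 4 images over 3 classes (not the project's outputs)
scores = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.5, 0.3],
                   [0.3, 0.3, 0.4],
                   [0.6, 0.3, 0.1]])
labels = np.array([[0], [1], [2], [1]])
acc = accuracy(scores, labels)  # the last image is misclassified
```

At the scale of this project, an accuracy of about 71% corresponds to roughly 7,100 of the 10,000 test images being classified correctly.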

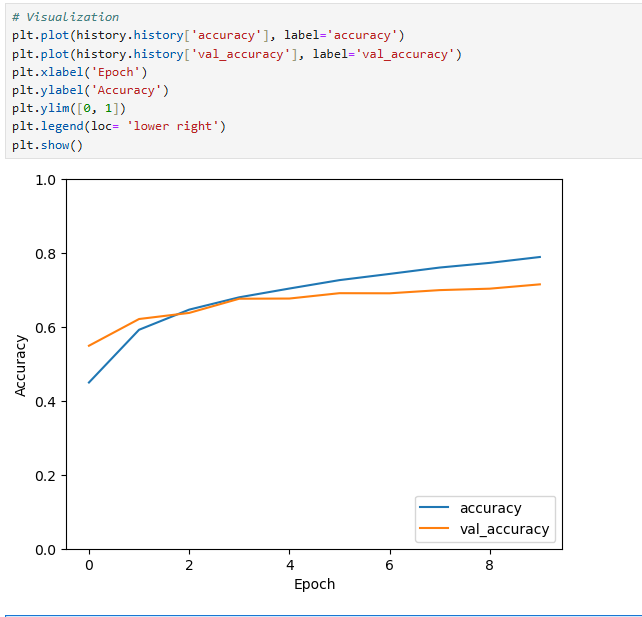

Step 5: Visualizing the Training Process

To gain insights into how the model performed over time, I plotted the training and validation accuracy after each epoch. This helped me understand whether the model was overfitting or underfitting.

The plot showed that training and validation accuracy both improved steadily, with a slight gap between them. This gap suggests mild overfitting, which techniques such as data augmentation or dropout could help reduce.
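The sketch below measures that gap per epoch and plots the two curves. It assumes a dict shaped like Keras' `History.history`, whose keys are `accuracy` and `val_accuracy` when the model is compiled with `metrics=['accuracy']` and fit with validation data. The three-epoch history is hypothetical. Matplotlib is imported only inside the plotting helper, so the gap calculation runs without it.

```python
def generalization_gap(history):
    """Per-epoch difference between training and validation accuracy."""
    train = history["accuracy"]
    val = history["val_accuracy"]
    return [t - v for t, v in zip(train, val)]

def plot_history(history):
    """Plot training vs. validation accuracy per epoch (requires matplotlib)."""
    import matplotlib.pyplot as plt  # lazy import: only needed for plotting
    epochs = range(1, len(history["accuracy"]) + 1)
    plt.plot(epochs, history["accuracy"], label="training accuracy")
    plt.plot(epochs, history["val_accuracy"], label="validation accuracy")
    plt.xlabel("Epoch")
    plt.ylabel("Accuracy")
    plt.legend()
    plt.show()

# Hypothetical 3-epoch history, for illustration only
history = {"accuracy": [0.50, 0.60, 0.70], "val_accuracy": [0.50, 0.55, 0.60]}
gaps = generalization_gap(history)
```

With a real run, `model.fit(...).history` supplies this dict. A gap that keeps widening while validation accuracy stalls is the usual sign of overfitting.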

Insights from the Project

Convolutional Neural Networks are incredibly effective for image classification tasks. They can capture spatial features like edges and textures through the use of convolutional layers.

Regularization techniques such as dropout could help reduce the slight overfitting observed, in which the model performed better on the training set than on the test set.

Data augmentation could help increase the diversity of the training data and improve generalization.
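Dropout randomly zeroes a fraction of units during training, so the network cannot rely on any single feature. The sketch below implements "inverted dropout" in NumPy. This is the scheme Keras' `Dropout` layer also follows: kept units are scaled by `1 / (1 - rate)` during training, so nothing changes at inference. In the project itself this would be a `tf.keras.layers.Dropout(rate)` layer, for example between the dense layers, with the rate treated as a hyperparameter.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest.

    Scaling kept units by 1 / (1 - rate) keeps the expected activation
    unchanged, so no rescaling is needed at inference time.
    """
    if not training or rate == 0.0:
        return activations
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob  # True = keep this unit
    return activations * mask / keep_prob

rng = np.random.default_rng(42)
x = np.ones((1000, 64))
y = dropout(x, rate=0.5, rng=rng)  # each value becomes 0.0 or 2.0
```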

Future Work

Hyperparameter Tuning: Adjusting parameters such as the number of layers, filter sizes and learning rates could improve performance.

Transfer Learning: Using a pre-trained model like VGG16 or ResNet could significantly boost performance with limited training data.

Data Augmentation: Adding transformations like rotations, flips, and zoom could make the model more robust and prevent overfitting.
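The sketch below shows two simple augmentations in NumPy, assuming images shaped `(N, 32, 32, 3)` as in CIFAR-10. The first is a random horizontal flip, from the list above. The second is a small random shift via padding and cropping, a common CIFAR-10 choice swapped in here for rotation and zoom, which need interpolation. In Keras, preprocessing layers such as `tf.keras.layers.RandomFlip("horizontal")`, `RandomRotation`, and `RandomZoom` provide these transformations as part of the model.

```python
import numpy as np

def random_flip_and_shift(batch, rng, max_shift=4):
    """Augment a batch of images shaped (N, H, W, C).

    Each image is mirrored left-right with probability 0.5, then shifted by up
    to `max_shift` pixels in each direction via zero padding and random cropping.
    """
    n, h, w, _ = batch.shape
    out = np.empty_like(batch)
    pad = ((max_shift, max_shift), (max_shift, max_shift), (0, 0))
    for k in range(n):
        img = batch[k]
        if rng.random() < 0.5:
            img = img[:, ::-1, :]  # horizontal flip (reverse the width axis)
        padded = np.pad(img, pad, mode="constant")
        top = rng.integers(0, 2 * max_shift + 1)
        left = rng.integers(0, 2 * max_shift + 1)
        out[k] = padded[top:top + h, left:left + w, :]
    return out

rng = np.random.default_rng(0)
images = rng.random((8, 32, 32, 3)).astype(np.float32)
augmented = random_flip_and_shift(images, rng)
```

Only horizontal flips are used because vertically flipped scenes, such as upside-down ships or trucks, rarely appear in the test set.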

Conclusion

In this project, I built and evaluated a CNN model to classify images in the CIFAR-10 dataset. While the model achieved a reasonable test accuracy of about 71%, there is still room for improvement through regularization and data augmentation. This project has given me a deeper understanding of CNNs, and I look forward to applying these techniques to more complex datasets in the future.
