Predicting House Prices with Machine Learning: A Step by Step Guide to Building a Regression Model

- Julia Johnson

- Sep 20, 2024

- 4 min read

Introduction

Welcome back to my blog! In this post, we'll dive into the world of Machine Learning by tackling a regression problem using a dataset on house prices. The goal of this project is to predict the sale price of houses based on various features like the number of rooms, square footage, location, etc.

By the end of this project, you'll not only have a working regression model but also an understanding of how to apply machine learning techniques to real-world problems. Let's dive into the step-by-step process of building this model.

Project Overview

In this project, we'll:

Load and explore the dataset to understand the features and clean any missing data.

Build a regression model using popular machine learning algorithms to predict house prices.

Test and evaluate the accuracy of the model to ensure it generalizes well to new data.

Visualize insights gained from the model to understand feature importance and relationships in the data.

This project will help solidify concepts around regression, feature selection, model evaluation, and data visualization.

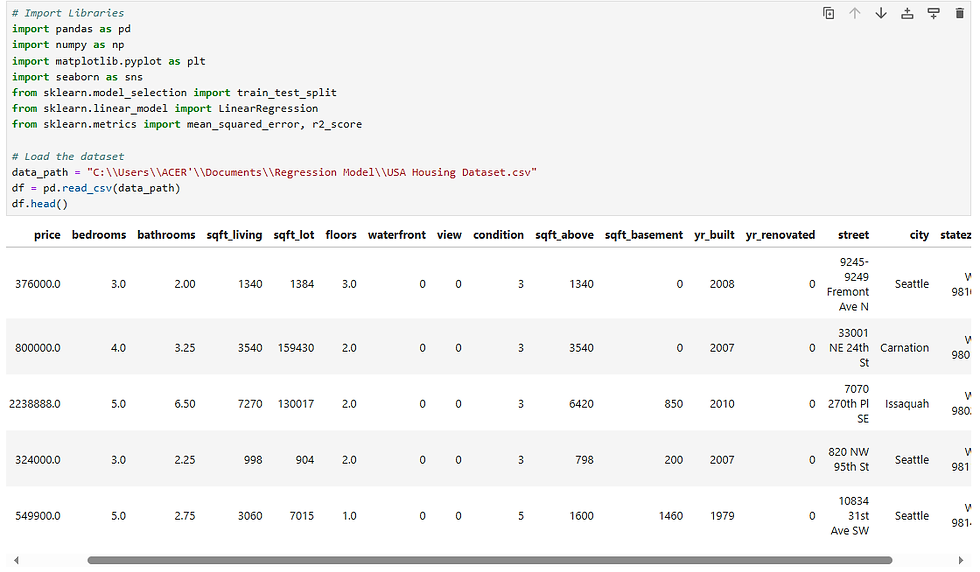

Step 1: Importing Libraries and Loading the Dataset

To Begin, let's import the necessary Python Libraries and load our dataset.

The dataset contains various features like the number of bedrooms, bathrooms, lot size, and most importantly, the Sale Price, which we want to predict.

Step 2: Exploratory Data Analysis (EDA) and Visualization

Before jumping into building the model, we need to explore the data to understand its structure, spot missing values, and detect any correlations. Because the street, city, 'statezip', and country is not needed in the dataset I am going to drop the columns.

Insights from EDA:

We can observe which features are highly correlated with Sale Price. For instance, features like sqft_living, bathrooms, and sqft_above(above-ground living area) tend to have high positive correlations.

We'll drop or fill any missing values before proceeding.

Step 3: Data Preprocessing

Next, let's handle any missing values and prepare the data for training.

Step 4: Building the Regression Model

In this project, I'll use Linear Regression, one of the most popular algorithms for regression tasks. This simple model works well when there is a linear relationship between features and the target variable.

Step 5: Testing for Accuracy

To measure the performance of our model, we'll use Mean Squared Error (MSE) and R-squared. These metrics will tell us how close our predictions are to the actual values.

As you can see the model's R2 Score explains that about 32.36% of the variance in the target variable (house prices). Suggests that the model is not performing very well, as a significant portion of the variation in house prices is not being captured by the model.

What Could Cause a Low R2 Score?

Insufficient or irrelevant features: The features used to predict the target variable may not be strong enough predictors.

If there is a non-linear relationship between the features and the target, a simple linear regression model may not capture that complexity. In this case we can try models like Random Forest or Gradient Boosting it could give us a better result.

Data quality Issues, missing values, outliers or incorrectly formatted data can affect the accuracy.

Multicollinearity: If some of the features are highly correlated with each other, it can make it difficult for the model to assign appropriate weights to each feature, lowering its performance.

Step 6: Visualizing the Results

To better understand the performance of the model, its's useful to visualize the predicted versus actual values.

Insight from Visualization:

The scatter plot shows the relationship between the actual and predicted sale prices. Ideally, the points should lie close to the diagonal line (where predicted values equal actual values).

We can observe that while many points align well, there are outliers where the model's predictions deviate from the actual values, indicating potential areas for improvement.

What I Learned from This Project

Completing this project allowed me to gain several valuable insights into the regression modeling process, including:

Feature Importance Matters, selecting the right features significantly impacts model performance.

Handling Missing Data, missing values can skew predictions, so it's important to handle them appropriately before training the model.

3. Simple models can be effective, even though Linear Regression is a simple model, it can still provide good predictions for problems where a linear relationship exists between features and the target variable.

Model Evaluation is key, Evaluating the model using metrics like MSE and R2 helped me understand where the model excels and where improvements are needed.

Strategies to help improve the model:

Add more relevant features, select features that might have a stronger impact on the target variable (house prices). Or create new features by combining existing ones.

Use nonlinear models, if a linear regression model doesn't perform well, try experimenting with other regression models that can capture more complex relationships. Such as Random Forest Regression, XGBoost, or Gradient Boosting Regression.

Conclusion

In this project, we built a Linear Regression model to predict house prices based on several features. We explored the data, built the model and evaluated its performance using MSE and R2. The model was not successful, explaining about 32% of the variance in the house prices. However, there's always room for improvement, and in future projects, I plan to experiment with more advanced models and techniques to enhance accuracy.

This project reinforced the importance of data preprocessing, model evaluation, and visualization in machine learning workflows. I'm excited to continue improving my skills and apply what I've learned to more complex challenges.

Feel free to check out my other blog posts and projects on this site, and stay tuned for more insights and tutorials in data science and machine learning!

Comments